My New Job Trying to Save the World

Old job: teaching communication skills; New job: Communications

I am closing my therapy practice to focus on my new job as Communications Manager at the Machine Intelligence Research Institute (MIRI), and I want to tell you the story of how that happened – but to do that, I’m going to have to go back about eight years, before I even started therapy school.

TL;DR of this article:

Awareness: an explanation of how smarter-than-human artificial intelligence could be catastrophic

We Are Losing: my personal realization that the danger was imminent

Adjustment: my grief process, followed by how I fell in love with Eliezer Yudkowsky

The World Notices the Problem: a glimmer of hope, and Eliezer gets famous

Shut It Down: the best way forward, how I’m helping, and how you can help too

1. Awareness

“Artificial Intelligence is a huge threat. Unchecked, it’ll probably kill all humans.”

It was the fall of 2015, and it was the first time I’d heard that claim. I was at a workshop about learning to think more clearly, and the crowd at that workshop was very plugged into concerns about AI. The idea that AI could be not just dangerous but apocalyptic sounded to me like science fiction – but if it were true, it would be very important. So, rather than dismissing the idea, I decided to try actually thinking about it.

I fast-forwarded in my mind to a day when superintelligence already existed alongside humans.1 There would be one or more super-smart computers that can do everything we can do, but faster and better. What would that be like? How would we interact with each other? Would we get along?

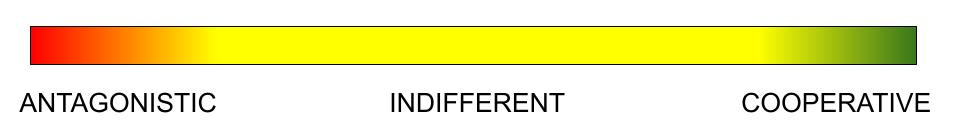

I envisioned a spectrum from “Antagonistic” to “Indifferent” to “Cooperative.” The newly born superintelligence could have any of those attitudes toward humans. The categories were fuzzy, but that was okay for a first pass at thinking about it.

It was obvious to me immediately that if for some reason the superintelligent AI was so antagonistic that it wanted us dead, then we would just lose. Just like if humanity decided that we wanted, let’s say, marmots to be extinct, we would get that job done. The marmots are not going to build a secret marmot hideout bunker. There is not going to be a plucky marmot hero who outwits the humans. Through some combination of conventional methods and engineered viruses or something, humans would wipe out the marmots.

We should definitely not build a superintelligent antagonistic AI on purpose. That would be stupid.

But it also seemed obvious that an AI would probably not turn out to be antagonistic by accident. It would have no particular reason to hate us. If it were so much smarter, it could just work around us or ignore us, go on about its alien business without regard to us. So I reasoned that the broad swath of “Indifferent” in the middle of the spectrum was the place to focus.

That’s when I started to get worried.

Anybody who’s ever written any kind of software knows that you sometimes get surprising emergent behavior in complex systems. You can end up with really unexpected side effects even when you just want something very simple – and a smarter-than-human AI would be extremely effective at accomplishing these emergent subtasks.2

You can imagine an AI that’s just trying to do something seemingly innocuous or even helpful, like build autonomous mineral-harvesting machines, or something.3 That seems like it could be very cool and useful. But it turns out that the AI is a little too efficient at building and deploying those machines, and fails to take human life and values properly into consideration along the way, with potentially catastrophic results. In that scenario, the AI doesn’t actively want to kill us, it just wants to harvest minerals and we’re in the way somehow.

It was really easy for me to picture that all going horribly wrong. And if the AI is superintelligent, then we are not going to be able to stop it because it’s always going to be several moves ahead of us.

One way I expect superintelligent AI to work is that it will try to protect itself from interference. If it anticipates that someone might try to turn it off or get in its way, it reason, “oh, if someone turns me off, then I won’t get to harvest minerals anymore, and that would be bad, because harvesting minerals is my whole deal!” So it might take steps to prevent anyone from turning it off or changing its goals or interfering with it, and it might become a little bit shifty about letting humans notice anything about it that they wouldn’t like.

I could also see that any potential disasters would probably not be small. The more powerful a system is, the more damage it can do. That indifferent mineral-harvesting system wouldn’t just be sociopathic, idly murdering the individual human who happened to be in its way as it went about its work. It could easily be genocidal: It could decide to harvest biological life for its minerals, or it could think ahead that humans might have priorities other than mineral harvesting and preemptively prevent them from interfering (by killing them all), or… well, it wasn’t hard to generate ideas.

And the AI would know that if it decided to pick a fight with us, it should not give us a chance to make any moves. In a conflict, we should expect a superintelligence to think ahead, to win decisively and immediately, before we even know there is a conflict.

So in this way, an AI that started out indifferent – it just wanted to harvest minerals! – could end up behaving exactly as though it were antagonistic, purely because it would be so efficient and effective at working toward its central goal. An “Indifferent” AI could easily also be fatal, and if so, we wouldn’t have any chance to save ourselves.

Okay, so then by process of elimination, I reasoned that we need to make sure that every superintelligent AI is pinned to the “Cooperative” side of that spectrum. We need to teach it to cherish us and help us thrive. Indifference is not enough. How could we do that?

This also seemed pretty hard. We have at least two really hard problems here.

First, we don’t actually know how to load our values into modern AI systems at all.4

Second, expressing the values that we want is itself a very hard problem. You might start out with something very simple like “don’t kill humans or let them die when you could prevent it” and end up with unexpected results, such as an AI system that races to put all humans in a persistent vegetative state so it can keep us all “alive” with the highest probability of success. You can probably program around that particular failure mode, but there will be another and then another. It just isn’t easy to say, concretely and specifically, what you actually mean when you ask the AI to be nice to humans.

So the results of my thought experiment were in. I was on board. I could see that humanity needed to solve some pretty hard problems before it would be safe to create a superintelligence. And if we didn’t solve those problems first, we were gambling with all life on earth.

And yet, in 2015, I wasn’t all that worried!

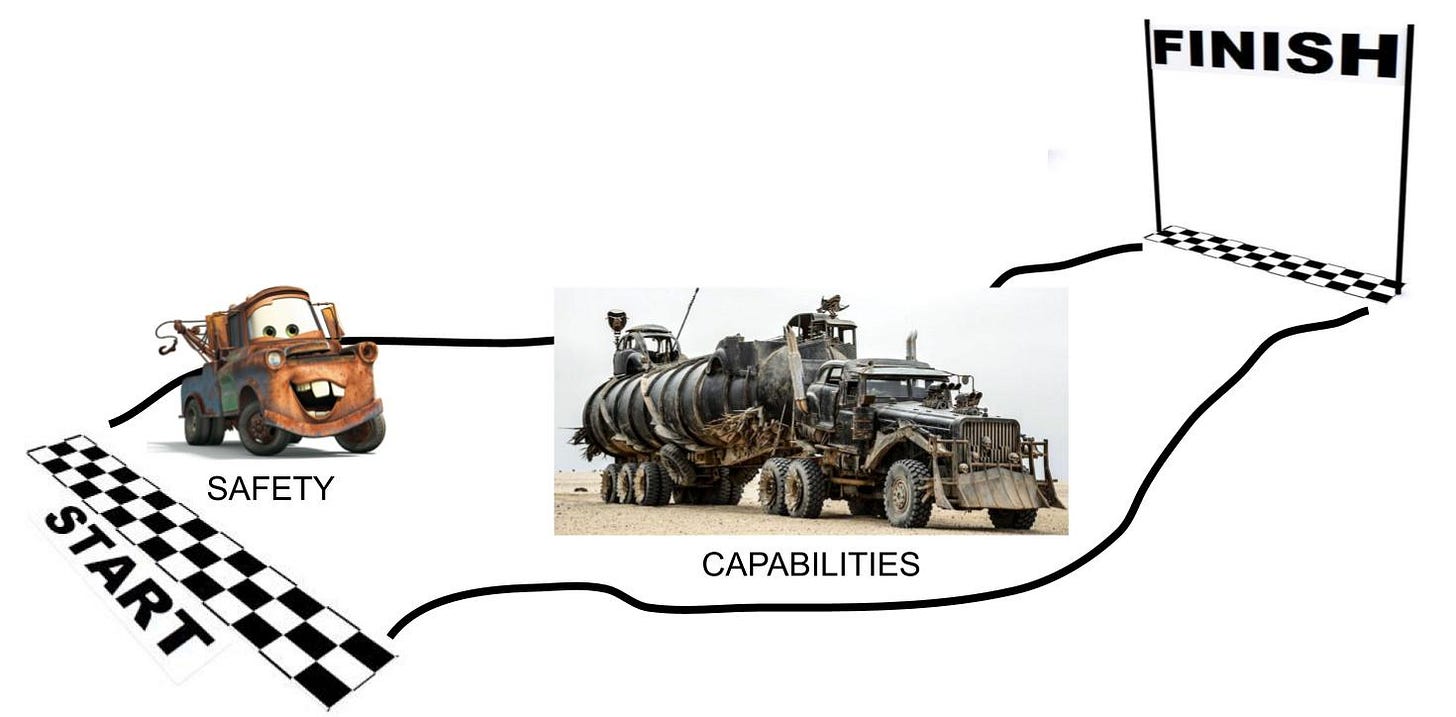

Imagine a race between Capabilities and Safety. Capabilities work is all the research people are doing to try to make AI smarter and more effective. Safety work is everything people are doing to try to shape and limit the AI’s behavior, to make it harmless or even good - to pin it to the Cooperative side of the spectrum.

If Capabilities work runs too far ahead of Safety work, then we’re screwed – because once the AI is smarter than we are, we’re not in control of the situation, and we’ve lost the chance to work on Safety.

But if Safety work runs fast enough, then we figure out how to make the AI nice before we lose control of the situation, and we’re okay.

From my point of view in 2015, things looked pretty good!

Capabilities were advancing, but slowly. We were a long way away from superintelligence. Computers were still pretty dumb. I was a software industry veteran and had spent many years running big, complex systems in production and I knew how much work it was for humans to keep those systems running. A major software service might have a team of 16 humans on opposite sides of the globe, working in shifts to fix things as they broke - and that was just at the level of that particular service. Many other teams were supporting the layers of infrastructure underlying the top-level service. These systems were as robust as we knew how to make them, and they were still incredibly needy, requiring tons of babying and coddling to stay running.

The idea that computer systems might just kick their human helpers to the curb and try to manage on their own anytime soon felt pretty far-fetched. It was like a toddler considering moving out and getting his own apartment.

Meanwhile, I was told, people were actively working on Safety. That was the first time I heard about a nonprofit called MIRI, or the Machine Intelligence Research Institute. Apparently there were a bunch of researchers there, hard at work on getting AIs to be “aligned,” or harmless and nice to humans. I was grateful to learn that people were taking the problem seriously.

So the race was real, and it mattered a lot, but it was moving in slow motion, and it seemed to me like it would probably all just work out. Humanity was up against a lot of big problems, from climate change to nuclear proliferation to pandemics. This was just one more. We needed to work on all of them, and we needed not to panic about any of them. It was covered, and there was time, or so I thought.

I didn’t think too much more about it for a long time.

2. We Are Losing

I made some good friends at that workshop in 2015, and I stayed in touch with them over the years, mostly online. Some of them were working directly on AI Safety, and a lot of them remained pretty worried about it. I continued to feel glad that they were worrying about it so I wouldn’t have to.

And then about a year and a half ago, in the spring of 2022, there was a flurry of online activity that felt really different to me. One of my friends, Duncan Sabien, made a general announcement predicting that all life as we know it will be very different within 5-10 years. Even if we didn’t have superintelligence yet, AI would just make things so weird and strange that our careers and families and social structures will shift a lot.

Was Duncan right, I wondered? I started looking around to see what was going on, and indeed, there had been several surprising-to-me advances in AI while I hadn’t been paying attention. I had vaguely noticed that AI had surpassed humans in chess and go, but I had missed the news about AlphaFold 2 and GPT-3. It was hard to tell whether the advances would keep coming or if there was just going to be a flurry of activity followed by a long lull, but these developments were noteworthy.

And around the same time, one of the leading figures in the AI Safety world, Eliezer Yudkowsky, made a pair of posts that were pretty alarming. On April Fools’ Day he posted something I initially thought was a joke, saying that MIRI’s new strategy was called Death with Dignity, because it appeared that it was too late to solve alignment; Capabilities was going to win the race. And then in June he posted the List of Lethalities, staking out 43 specific positions that, together, explain how he had arrived at the grim conclusion that Death with Dignity was the best remaining orientation to our situation. For me, this was an update that Safety was not meaningfully advancing at all.

These three posts together got my attention. I decided to take a fresh look at the problem. My whole stance, after all, had been something like “good thing the Safety researchers are on the case.” But now I was hearing from one of the major research institutes in the Safety space that while they had not given up, they did fully expect to lose. Capabilities was going to cross the finish line and create superintelligence before we were ready. Humanity was going to lose control of the situation, and the most likely outcome was that we would all die.

I looked into it, and found that my assessment from 2015 was indeed badly out of date. It no longer looked like we had decades of time to work, and it no longer looked like we’d probably figure out Safety in time. We were losing the race.

Even worse, the people working on Capabilities did not seem particularly interested in slowing down or stopping. Increasing Capabilities is incredibly exciting and wildly profitable work, and while many Capabilities researchers agree that superintelligent AI is dangerous and even potentially fatal to humanity, they mostly want to continue to forge ahead.5

My main response to this was, “Oh. Shit. That’s incredibly bad news.”

3. Adjustment

In June of 2022 I sat down again to think about what I should do about this situation.

Obviously I wanted to help, but I couldn’t immediately think of a way to do so. How do you help win a race when you’re not a runner? Working directly on AI safety is pretty hard, as it’s a deeply technical field. I do have a master’s degree in computer science and a bachelor’s in math, but it would take me several years to come up to speed and be able to contribute. Even if I spent those years, I wasn’t sure my brain still had enough plasticity to do novel work.

I’m also not sure I have the right shape of mind. I’ve distributed my lifetime skill points pretty widely. I spent 15 years working in tech in various capacities, including building and running the infrastructure behind major web services at Google.6 I left tech in 2015 and retrained to become a relationship and sex therapist. I’m also a parent to three children, I have a lot of creative hobbies, and I write.

I mastered all of those things to various degrees, but at heart, I am a generalist. I’m curious, I like novelty, I like a new challenge. I’m like that Heinlein quote about all the things a competent person can do – except I don’t actually believe specialization is for insects. I think really hard technical problems are usually solved by specialists, and I am not a specialist.

I do not think AI Alignment – pinning the AI to the Cooperative end of the spectrum – is going to be solved by a person shaped like me.

So, then, if the world seemed to be ending, and there was nothing I could directly do about it, then what?

I made a short list of practical adjustments, appropriately hedged. There was surprisingly little to do here, because we know so little about how much time there actually is. If you know for sure you have five years, you can make a lot of life trajectory decisions with that information – but we don’t know anything like that.

I made a list of resolutions like “be kind” and “don’t hold grudges” that, when written down, look like the morals of a bunch of episodes of a preachy after-school kids’ show, but that are good ideas anyway.

And, most importantly, I grieved – a lot. I am not done grieving, not at all, but the time I spent on grief last summer felt productive in some way. Rather than shying away from my pain and continually bumping back into it by surprise, I confronted it and occupied it and let it sink into me. I visualized the future as I had imagined it, and keenly felt the loss of that future. I cried the hardest when I thought about my children. I don’t know how much time we have, or how much lifespan they will end up with but, whatever it is, it’s too short.

When I had come through most of that process and felt like I’d made my adjustment, I wrote an article about it, and some people have told me that it helped them adjust too.

Meanwhile, life went on, and I tried not to forget to enjoy it. Even if there’s not a lot of time, we are here now, we are alive now, and I tried to feel the joy and wonder and splendor of that almost every day. Even while I had grave concerns about the future, that future had not yet arrived.

Late last summer I attended Duncan’s wedding. It was held at a trampoline park and it was the best wedding I’ve ever been to, though explaining all the delightful strangeness of that event is too off topic for this post. I felt a sense of community at that wedding that I’d really been missing through the pandemic years.

And, notably, I met Eliezer at that wedding. I’d been aware of him online for years and was curious to see if he was as arrogant as he seemed. He was! But he was also warm and funny and kind. So I asked him out on a date, and that went well, so I visited him in Berkeley, and he visited me in Seattle, and then he moved to Seattle, and here we are – happy, even though the world is probably ending. The last year with Eliezer has been more consistently joyful than any other time of my life, due mostly to finding someone who suits me so well. He and I are both weirdos, but we are very similar weirdos and we have found a degree of compatibility with each other that neither of us knew was possible with other humans. We spend a lot of time together bouncing increasingly ridiculous ideas back and forth and laughing helplessly.

When people think of us, they might imagine that we sit together weeping for the future and railing against the desperate plight of humanity, and not gonna lie, there’s at least a little of that.7 But mostly it’s the other thing.

Life goes on, for now, and we’re enjoying it.

4. The World Notices the Problem

Last winter, the AI landscape changed visibly and rapidly. I think most regular people had no idea that AI could ever rival humans at important, creative tasks, or they thought of smart AIs as being very far in the future. After the release of ChatGPT on November 30, followed by GPT-4 on March 14, the general public began to notice how fast AI Capabilities were advancing.

On February 20, Eliezer was on the Bankless podcast. He expected to spend the interview talking about cryptocurrency, but the hosts asked him a few questions about artificial intelligence. They were so alarmed by Eliezer’s frank description of how bad things look for humanity that they spent the entire interview on that, and then had a series of other AI experts on after Eliezer to continue exploring the issue.

On March 29, TIME ran an op-ed by Eliezer in which he advocated for a multinational agreement to halt the development and deployment of frontier AI systems more powerful than the ones we have already launched. The next day, a reporter asked the White House Press Secretary for comment on Eliezer’s claim that AI could kill us all, and she laughed it off, but a few months after that, AI had become a major item on the regulatory agenda of every economically powerful country in the world.

On April 18, Eliezer spoke on stage at the main TED conference in Vancouver.

The Overton window had shifted. Regular people were aware of AI and concerned about it, and wanted to regulate it so it couldn’t kill us.

I was stunned by all of these developments. My frame for years had been that Safety needed to go fast enough to beat Capabilities, because Capabilities were going to advance no matter what. Capabilities work is unpredictable: it moves by fits and starts. Sometimes nothing happens at all for many years, but when there’s a breakthrough, it can be breathtaking.

Quite out of the blue, a new possibility for survival had emerged. Maybe we were not at the mercy of random lurches forward by the Capabilities car in the race. Maybe we could use government intervention to force Capabilities to stop for a long time and let Safety catch up and win the race.

Here was a real glimmer of hope.

Meanwhile, behind the scenes, it was a very strange time. Eliezer had moved to Seattle in January and we were adjusting happily to having a lot more time together. Neither of us had expected, when he moved, that he was going to become a minor celebrity.

He didn’t have a good place of his own to record the Bankless podcast, so he did it in my guest room. Right before the interview, he combed his eyebrows up into mad scientist mode and then waggled them at me to make me laugh. It was a week later when I watched the video that I realized he never combed them back down!

We scrambled as more and more podcast requests came in to set up better infrastructure for recording. I helped him buy more camera-ready clothing. We debated the merits of the hats he liked to wear for interviews. People on Twitter had opinions, so he invited them to email eliezer.girlfriends@gmail.com and route their feedback through his Fedora Council.

At that time, Eliezer was managing all of his own press inquiries, and he was not really able to keep up with them all. I find it far less taxing than he does to manage a high volume of email, so I joined MIRI as a contractor and started helping with all the correspondence and scheduling and tracking for media requests.

It wasn’t just Eliezer who was drowning under the weight of media attention - the entire MIRI organization was pivoting to focus much more on communications and policy, and they needed help with all of that. The CEO asked me to expand my role and come on as Communications Manager, and I was quick to agree. I’ve been ramping up there and winding down my therapy practice ever since. It was gratifying for me finally to have something to do, a concrete way I could really help.

5. Shut It Down

So here we are in August of 2023. I do not like our odds as a species; I think we are probably all going to die, because we’re not going to coordinate well enough to do the right thing.

There are two ways we could survive.

We could survive by solving alignment very soon now. The people driving the Safety car could step on the gas and zoom past the Capabilities car and get to the finish line first. Unfortunately, no one has any great ideas here, we are absolutely not on the verge of a solution. (Frighteningly, the people who talk loudest about having a solution plan to build the AI first, and then use the AI to figure out Safety. I worry very much about how bad things could get during the time period when there’s an unaligned AI that is smarter than we are; we have concrete evidence that AIs will lie to us, so I don’t trust an unaligned AI to cooperate with us on reining itself in.)

The other way we could survive is by stopping Capabilities from advancing any further. Not forever, just for a very long time, to give us time for Safety to win the race.

I don’t know how to help with the first path, but I do know how to work on the second one. I might be able to help explain the problem and get humanity to wake up and slow down.

When I told my therapy clients I was closing my practice to take this job, they were sad to lose me as a therapist, but nearly all of them thanked me for working on AI Safety. They were newly aware of AI as an emerging issue, and most of them were worried about it. I found their response really encouraging! For so long, people inside the tech bubble have been very dismissive of AI risks; it is really gratifying that regular people are not dismissive at all.

I found my path for helping. I feel great about that. A lot of people keep asking me, though, how they can help, and I don’t think “start dating Eliezer Yudkowsky and then start working in Communications at MIRI” is an answer that’s going to scale past 10 people at most.

Here’s what I think you should do:

Think for yourself. Figure out what you believe about superintelligent AI and the future of humanity. Read. Ask questions. Try to understand.8

If you become alarmed in the process, don’t keep silent about that. Talk to the people around you. Be truth-seeking. Grapple with the questions together.

And if you find your convictions solidifying the way mine did, advocate for change. Demand limitations on AI development that will keep everyone safe. Help stop the Capabilities car from racing across the line before we’re ready. Shut it Down.

Most of all, don’t brush this off. Don’t shy away from looking at it because it’s too weird or too uncomfortable. This matters, and there is actually still time to stop and do the right thing. We’re still alive. Let’s work together to stay that way.

You might ask, will artificial intelligence ever really get as smart as humans? I think for a long time, many people had serious doubts about this. By 2015 I didn’t have that question anymore, and I think in 2023 a lot more people have come around, but probably many people still doubt it. Fair enough! Arguing that point in particular is not the point of this piece, but if enough people ask me that question I can write something else later about that.

If this point doesn’t feel obvious to you, that’s okay, you’re not alone. I was working from a lot of intuition based on many years of software engineering, including building very simple AIs in game development and running very big, complex services at Google. I’m not going to take the time here to really expand and develop the points in this paragraph but if people ask me to I might write more about it later.

I am not sure if anyone is doing this exact thing in 2023, but virtually every major corporation in the world is building AI into their corporate strategy and planning to use AI to pursue and achieve goals in the real world.

Speaking from 2023: As you may be aware, the company that makes ChatGPT has tried very hard to put guardrails on it such that it won’t give out dangerous information, but limiting the system has not completely worked. There are many documented examples of ChatGPT coloring outside the lines.

One reason it’s hard to put limitations on these systems is because of the way they’re built. Old-style software was written by humans would would write code that said “Do X, and then do Y, and then if condition C is met do action D ten times. Oh, and check if you are about to do Q, and if so stop (because Q is bad and you should never do it).”

Modern AI systems are not like that. Nobody tells them what sequence of actions to take. Instead, they start with somewhat random behavior and get ‘trained’ to incrementally behave more and more the way we want them to behave. Getting into the details of how this works is far beyond what I want to cover here, but suffice it to say that loading values in is tricky and error-prone, and even the best behaved systems such as Anthropic’s Claude system have an error rate that is unacceptably high for important safety issues.

Beliefs here are all over the place, can say more or link to resources if you ask.

I also spent a few years figuring out how to scalably measure and improve map data quality for Google Maps. It was literally my job to make the map match the territory.

People sometimes ask me what it’s like to be with someone who is so sure we’re all going to die. My answer is that my own assessment of our chances were more pessimistic than Eliezer’s until October 2022 when he convinced me that there was a way forward at all.

I apologize that there is a great shortage of easy-to-digest material on this topic; changing that is my new job, but I’ve only just started. One place to start is aisafety.info but it’s still too dense for most readers.

I was literally just thinking about you this morning and pondering that I hadn't seen a post from you in a while. All the pieces have fallen together and now it makes much more sense! I'm excited to see more from you about this topic, as I've found your previous posts about AI really informative and interesting to chew on. Thank you for continuing to pursue things you are passionate about in order to (hopefully) change the world. <3

Congratulations on a dreamy job situation! Wow firing on all cylinders.